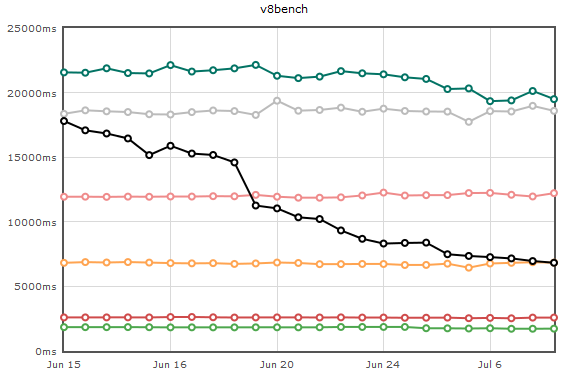

On July 12th, JägerMonkey officially crossed TraceMonkey on the v8 suite of benchmarks. Yay! It’s not by a lot, but this gap will continue to widen, and it’s an exciting milestone.

A lot’s happened over the past two months. You’ll have to excuse our blogging silence – we actually sprinted and rewrote JägerMonkey from scratch. Sounds crazy, huh? The progress has been great:

The black line is the new method JIT, and the orange line is the tracing JIT. The original iteration of JägerMonkey (not pictured) was slightly faster than the pink line. We’ve recovered our original performance and more in significantly less time.

What Happened…

In early May, Dave Mandelin blogged about our half-way point. Around the same time, Luke Wagner finished the brunt of a massive overhaul of our value representation. The new scheme, “fat values”, uses a 64-bit encoding on all platforms.

We realized that retooling JägerMonkey would be a ton of work. Armed with the knowledge we’d learned, we brought up a whole new compiler over the next few weeks. By June we were ready to start optimizing again. “Prepare to throw one away”, indeed.

JägerMonkey has gotten a ton of new performance improvements and features since the reboot that were not present in the original compiler:

- Local variables can now stay in registers (inside basic blocks).

- Constants and type information propagate much better. We also do primitive type inference.

- References to global variables and closures are now much faster, using more polymorphic inline caches.

- There are many more fast-paths for common use patterns.

- Intern Sean Stangl has made math much faster when floating-point numbers are involved – using the benefits of fat values.

- Intern Andrew Drake has made our JIT’d code work with debuggers.

What about Tracer Integration?

This is a tough one to answer, and people are really curious! The bad news is we’re pretty curious too – we just don’t know what will happen yet. One thing is sure: if not carefully and properly tuned, the tracer will negatively dominate the method JIT’s performance.

The goal of JägerMonkey is to be as fast or faster than the competition, whether or not tracing is enabled. We have to integrate the two in a way that gives us a competitive edge. We didn’t do this in the first iteration, and it showed on the graphs.

This week I am going to do the simplest possible integration. From there we’ll tune heuristics as we go. Since this tuning can happen at any time, our focus will still be on method JIT performance. Similarly, it will be a while before an integrated line appears on Are We Fast Yet, to avoid distraction from the end goal.

The good news is, the two JITs win on different benchmarks. There will be a good intersection.

What’s Next?

The schedule is tight. Over the next six weeks, we’ll be polishing JägerMonkey in order to land by September 1st. That means the following things need to be done:

- Tinderboxes must be green.

- Everything in the test suite must JIT, sans oft-maligned features like E4X.

- x64 and ARM must have ports.

- All large-scale, invasive perf wins must be in place.

- Integration with the tracing JIT must work, without degrading method JIT performance.

For more information, and who’s assigned to what, see our Path to Firefox 4 page.

Performance Wins Left

We’re generating pretty good machine code at this point, so our remaining performance wins fall into two categories. The first is driving down the inefficiencies in the SpiderMonkey runtime. The second is identifying places we can eliminate use of the runtime, by generating specialized JIT code.

Perhaps the most important is making function calls fast. Right now we’re seeing JM’s function calls being upwards of 10X slower than the competition. Its problems fall into both categories, and it’s a large project that will take multiple people over the next three months. Luke Wagner and Chris Leary are on the case already.

Lots of people on the JS team are now tackling other areas of runtime performance. Chris Leary has ported WebKit’s regular expression compiler. Brian Hackett and Paul Biggar are measuring and tackling what they find – so far lots of object allocation inefficiencies. Jason Orendorff, Andreas Gal, Gregor Wagner, and Blake Kaplan are working on Compartments (GC performance). Brendan is giving us awesome new changes to object layouts. Intern Alan Pierce is finding and fixing string inefficiencies.

During this home stretch, the JM folks are going to try and blog about progress and milestones much more frequently.

Are We Fast Yet Improvements

Sort of old news, but Michael Clackler got us fancy new hovering perf deltas on arewefastyet.com. wx24 gave us the XHTML compliant layout that looks way better (though, I’ve probably ruined compliance by now).

We’ve also got a makeshift page for individual test breakdowns now. It’s nice to see that JM is beating everyone on at least *one* benchmark (nsieve-bits).

Summit Slides

They’re here. Special thanks to Dave Mandelin for coaching me through this.

Conclusion

Phew! We’ve made a ton of progress, and a ton more is coming in the pipeline. I hope you’ll stay tuned.